Sustainably Meeting High-Density Cooling Challenges: When, Where, and How

By Julius Neudorfer, DCEP

December 2021

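Translator's Note

Zero-water-consumption water cooling, gravity-fed cooling… This white paper covers everything from the fundamentals of IT equipment heat removal to the cutting-edge technologies for achieving zero-water, zero-carbon sustainability given the reality of high-density racks, and is well worth studying.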

Introduction

Data centers have seen a continuous increase in IT equipment power densities over the past 20 years. The past five years have brought a near-exponential rise in the power requirements of CPUs, GPUs, other processors, and other ITE components such as memory. Managing this intensified heat load has become more challenging for IT equipment manufacturers, as well as for data center designers and operators.

The power density of mainstream off-the-shelf 1U multiprocessor servers now typically ranges from 300 to 500 watts (some models can reach 1,000 watts). Stacked 40 per cabinet, they can demand 12-20 kW. The same is true for racks loaded with multiple blade servers.
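The 12-20 kW figure is per-server draw multiplied by server count. Here is a minimal Python sketch. It assumes identical servers that all draw their stated power at the same time, so it gives an upper-bound estimate rather than a measured rack load:

```python
def rack_power_kw(watts_per_server: float, servers_per_rack: int = 40) -> float:
    """Rack IT load in kW, assuming every server draws the same power simultaneously."""
    return watts_per_server * servers_per_rack / 1000.0


if __name__ == "__main__":
    # Per-server figures from the text: 300-500 W typical, up to 1,000 W for some models.
    for watts in (300, 500, 1000):
        print(f"{watts:>5} W x 40 servers -> {rack_power_kw(watts):.0f} kW per rack")
```

At the 1,000 W end, the same 40-server rack would reach about 40 kW. That is well beyond the 20 kW per cabinet that perimeter cooling struggles to handle.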

Cooling at this power density has already proven nearly impossible for older facilities and is challenging even for some data centers designed and built only five years ago. Many newer data centers can accommodate some cabinets at this density only through various workarounds, and their operators have realized that this impacts cooling energy efficiency. Moreover, the demand for more powerful computing for artificial intelligence (AI) and machine learning (ML) will continue to drive power and density levels higher. Processor manufacturers' product roadmaps call for CPUs and GPUs that are expected to exceed 500 watts per processor in the next few years.

The world is trying to mitigate climate change by addressing core sustainability issues. For data centers, energy efficiency is an important element of sustainability; however, energy usage is not the only factor. Today, many data centers use a significant amount of water for cooling.

This paper examines the issues and potential solutions for efficiently and sustainably supporting high-density cooling while reducing energy usage and minimizing or eliminating water consumption.

IT Equipment Heat Removal Challenges

IT Equipment Thermal Management

What is thermal management, and how is it different from cooling (free or otherwise)? While it may seem like semantics, there is an important difference at both the design-approach level and the technical level. Generally speaking, we have traditionally “cooled” the data center by means of so-called “mechanical” cooling. This process requires energy to drive the motor of the mechanical compressor that drives the system (in reality, it is a “heat pump,” since it transfers heat from one side of the system to the other). Getting the heat from the chip to the external heat rejection is the key to end-to-end thermal management effectiveness and energy efficiency.

Traditional mainstream data centers use air-cooled IT equipment (ITE). However, ITE power density has risen so significantly that it has become difficult to cool IT equipment beyond 20 kW per cabinet effectively and efficiently with traditional perimeter cooling systems. Improved airflow management, such as cold or hot aisle containment, has helped improve the effectiveness of IT thermal management within the whitespace. It still requires a significant amount of fan energy for both the facility cooling units and the ITE internal fans. Close-coupled cooling systems, such as rear-door heat exchangers and row-based cooling units, can support higher power densities more effectively.

Air Cooling of the Whitespace

Although IT equipment has continuously improved its overall energy efficiency (i.e., performance per watt consumed), total power draw has increased tremendously. For mainstream data centers being designed and built today, this has raised the average power density of the whitespace from under 100 watts per square foot to 200-300 W/sq ft or even higher. This average power density can still be cooled using conventional methods, such as a raised floor with perimeter cooling units, but it becomes a greater challenge every year. The bigger challenge starts at the processor level, moves through the heat transfer process within the IT equipment, and eventually impacts rack power density.
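Watts per square foot translate directly into the floor area a given IT load occupies. Here is a minimal Python sketch. It assumes a hypothetical 1 MW IT load and treats each density as a whitespace-wide average:

```python
SQFT_PER_SQM = 10.7639  # 1 square meter is about 10.7639 square feet


def whitespace_area_sqft(it_load_kw: float, watts_per_sqft: float) -> float:
    """Whitespace area needed to host an IT load at a given average power density."""
    if watts_per_sqft <= 0:
        raise ValueError("power density must be positive")
    return it_load_kw * 1000.0 / watts_per_sqft


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical 1 MW of IT load
    for density in (100, 200, 300):  # W/sq ft values cited in the text
        area = whitespace_area_sqft(it_load_kw, density)
        print(f"{density} W/sq ft -> {area:,.0f} sq ft ({area / SQFT_PER_SQM:,.0f} m^2)")
```

Raising the average density from 100 to 300 W/sq ft cuts the whitespace needed for the same load to one third. The same amount of heat is then concentrated in a much smaller floor area.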

The thermal design power (TDP) of processors (CPUs, GPUs, TPUs, and other upcoming devices) and of many other devices, such as memory, has increased significantly over the past decade. Today, even CPUs with air-cooled heat sinks in low-profile commodity and mid-level servers can draw 100-150 watts each, but most of these air-cooled designs have difficulty moving up to 200 W per processor. As a result, the power density of individual IT equipment and of each rack has risen significantly and continuously, driving an overall rise in watts per square foot in the whitespace. As noted in the introduction, the power density of mainstream off-the-shelf 1U multiprocessor servers now typically ranges from 300 to 500 watts (some models can reach 1,000 watts). Stacked 40 per cabinet, they can demand 12-20 kW. The same is true for racks loaded with multiple blade servers.

Understanding Airflow Physics

The nature of the challenge begins with the basic physics of using air as the medium of heat removal. The traditional cooling unit is designed to operate with a difference of approximately 20°F between the air entering and leaving the unit (i.e., delta-T or ∆T). However, the ∆T of modern IT equipment varies widely with its operating conditions and computing load, ranging from 10°F to 40°F during normal operations. This in itself creates airflow management issues. Many data centers were not designed to accommodate such a wide range of temperature differentials, and in those facilities the result is hotspots. It also limits the power density per rack. Various forms of containment have been applied to try to minimize or mitigate this issue. Ideally, this is accomplished by providing closer coupling between the IT equipment and the cooling units.

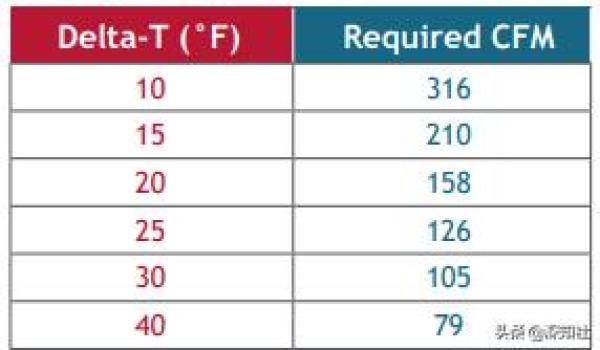

The most common disconnect is not just ∆T but its companion: the rate of airflow required per kilowatt of heat. This is expressed by the basic airflow formula, BTU/hr = CFM × 1.08 × ∆T (°F), which in effect defines the inverse relationship between ∆T and the airflow required for a given amount of heat. For example, it takes 158 CFM at a ∆T of 20°F to transfer one kilowatt (about 3,412 BTU/hr) of heat. Conversely, it takes twice that airflow (316 CFM) at a ∆T of 10°F. Such a relatively low ∆T increases the overall facility fan energy required to cool the rack, which increases PUE. It also increases the energy consumed by fans inside the IT equipment. That raises the IT load without doing any computing work and thus artificially “improves” facility PUE. A low ∆T also limits the power density per rack.

Airflow per kilowatt of heat transferred*

*Note: For purposes of these examples, we have simplified the issues related to dry-bulb vs. wet-bulb temperatures and latent vs. sensible cooling loads.
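Rearranging the airflow formula gives CFM = (kW × 3,412) / (1.08 × ∆T), which can be used to tabulate airflow per kilowatt across the 10-40°F ∆T range. Here is a minimal Python sketch. It assumes 1 kW ≈ 3,412.14 BTU/hr and the standard-air constant 1.08, and it covers sensible heat only, as in the simplification noted above:

```python
BTU_PER_HR_PER_KW = 3412.14  # 1 kW is about 3,412 BTU/hr
AIR_FACTOR = 1.08            # standard-air constant in BTU/hr = CFM x 1.08 x dT(F)


def cfm_per_kw(delta_t_f: float) -> float:
    """Airflow (CFM) needed to remove 1 kW of sensible heat at a given air delta-T in deg F."""
    if delta_t_f <= 0:
        raise ValueError("delta-T must be positive")
    return BTU_PER_HR_PER_KW / (AIR_FACTOR * delta_t_f)


if __name__ == "__main__":
    for dt in (10, 20, 30, 40):
        print(f"dT = {dt:>2} F -> {cfm_per_kw(dt):4.0f} CFM per kW")
    # Prints roughly 316, 158, 105 and 79 CFM per kW.
```

Because airflow scales with 1/∆T, a 20 kW rack needs roughly 6,300 CFM at a ∆T of 10°F, versus about 3,200 CFM at 20°F.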

To overcome these issues, manufacturers of higher-density ITE, such as blade servers, design their equipment to operate at a higher ∆T whenever possible. This saves IT fan energy, but it can also create higher return temperatures for the cooling units. For most cooling units fed from chilled water (computer room air handlers, or CRAHs), this is not an issue. In fact, it is beneficial, since it improves heat transfer to the cooling coil for a given airflow. Other types of cooling units use internal refrigerant compressors, such as Direct Expansion (DX) Computer Room Air Conditioners (CRACs). For these units, higher return temperatures can become a problem and can push the compressor beyond its specified maximum return temperature.

Liquid Cooling

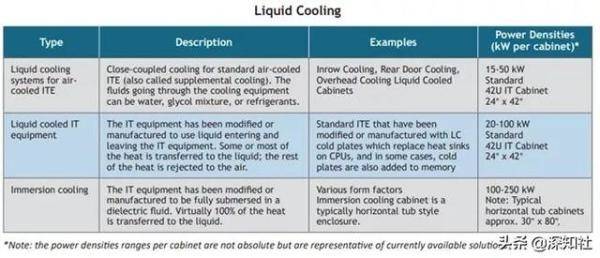

Liquid cooling (LC) has become something of a catchall term covering many different technologies and methodologies for transferring heat from the IT equipment to the environment. This is a highly complex subject. For the purposes of this discussion, it is simplified into several major categories, described in the table below. For additional details and references on liquid cooling, see The Green Grid white paper WP#70, Liquid Cooling Technology Update.

An ASHRAE 2021 white paper, Emergence and Expansion of Liquid Cooling in Mainstream Data Centers, focuses on the need for data centers to accommodate and adapt to rising power densities. It notes that higher-power processors are the primary thermal management challenge, and that the growing number of memory DIMMs in new servers also adds to the load. The white paper discusses a roadmap in which per-processor power (CPU, GPU, etc.) moves from 120-200 watts to 600 watts. It also reports on these limitations, noting that “A fan power percentage of 10% to 20% is not uncommon for some of the denser air-cooled servers.”

The Green Grid Metric: Power Usage Effectiveness

The Green Grid (TGG) created the Power Usage Effectiveness (PUE) metric in 2007. It has since become the most globally recognized facility efficiency metric. It has helped drive down PUE for “traditional” enterprise and colocation multi-tenant data centers (MTDCs), from approximately 2.0 in 2010 to 1.4 or less in 2020. In 2016, the International Organization for Standardization (ISO) adopted PUE and other TGG metrics as key performance indicators (the ISO/IEC 30134 KPI series). As an energy efficiency comparison yardstick, PUE is one-dimensional. Nonetheless, its underlying simplicity lets data center operators easily calculate (or estimate) a facility’s PUE, which drove its widespread adoption.

*Note: The power density figures are not outdated and represent some current solutions.
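PUE is the ratio of total facility energy to IT equipment energy. The Python sketch below uses hypothetical annual figures. It also shows why fan energy inside the ITE distorts the metric: server fan energy is counted as IT load, so the same total energy yields a lower PUE than if those fans were counted as cooling overhead.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


if __name__ == "__main__":
    # Hypothetical annual energy figures in kWh, for illustration only.
    compute_kwh = 8_000_000     # IT energy excluding the servers' internal fans
    server_fan_kwh = 1_000_000  # energy used by fans inside the IT equipment
    overhead_kwh = 3_000_000    # cooling plant, power losses, lighting, etc.

    total_kwh = compute_kwh + server_fan_kwh + overhead_kwh  # 12,000,000 kWh

    # As normally reported: server fans are part of the IT load.
    print(f"PUE (fans counted as IT):       {pue(total_kwh, compute_kwh + server_fan_kwh):.2f}")
    # Same facility, treating server fans as cooling overhead instead.
    print(f"PUE (fans counted as overhead): {pue(total_kwh, compute_kwh):.2f}")
```

The first line prints 1.33 and the second 1.50 for the same total energy. That gap is the "artificial improvement" produced by shifting fan energy into the IT load.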

Moving beyond PUE

For many years, the data center ecosphere quoted PUE as the primary element of its “green” mantra. PUE was important in raising energy awareness, but it is only one part of a more in-depth conversation about data center sustainability. The current focus is on decarbonizing and moving to 100% renewable energy sources. Renewable energy is clearly an important factor. However, many data centers use water as part of the external heat rejection process, regardless of the energy source.

External Heat Rejection Systems

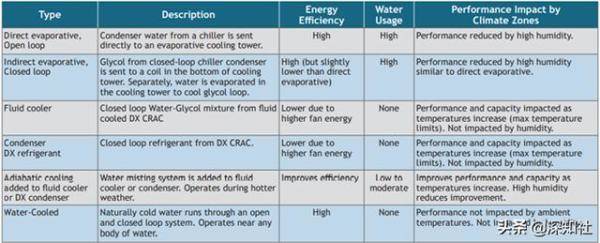

We have been discussing the differences between air cooling and liquid cooling of IT equipment within the data center, the topic that has recently received the most attention due to rising power densities. However, the various types of heat rejection systems also have a significant impact on the energy efficiency and sustainability of the data center facility.

Generally speaking, the most common external heat rejection methods in mainstream data centers fall into the categories listed in the table below.

Water is a critical global sustainability resource. According to the US EPA, at least 40 states anticipate water shortages by 2024 and consider water conservation critical.

Water Usage Effectiveness (WUE)

The Green Grid developed the Water Usage Effectiveness (WUE) metric in 2011. While many data center operators measure and report their PUE publicly, very few disclose their water usage, and the PUE metric does not take water consumption into account. When discussing water consumption and WUE, it is important to distinguish two types of WUE calculation: WUE site and WUE source.

WUE Site: based on the water used by the data center, expressed as annualized liters of water used per kWh of IT energy (liters/IT kWh). This is the most commonly used reference and is easily measured.

WUE Source: based on the annualized water used by the energy source (power generation) plus the site water usage, expressed as liters of water used per kWh of IT energy (liters/IT kWh).

Many people are unaware of the two types of WUE. In most cases, data centers that cite a WUE value are referencing only their site WUE.
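The two WUE variants differ only in whether the water used to generate the facility's electricity is added to the site water. Here is a minimal Python sketch. It assumes source water is estimated by multiplying total facility energy by a grid water-intensity factor in liters per kWh. That factor and all the numbers below are hypothetical placeholders, to be replaced with figures for the actual energy supply:

```python
def wue_site(site_water_l: float, it_kwh: float) -> float:
    """WUE site: annual liters of water used at the data center per kWh of IT energy."""
    return site_water_l / it_kwh


def wue_source(site_water_l: float, total_facility_kwh: float,
               it_kwh: float, grid_l_per_kwh: float) -> float:
    """WUE source: (water used to generate the facility's energy + site water) per IT kWh.

    grid_l_per_kwh is an assumed water-intensity factor for the electricity supply.
    """
    source_water_l = total_facility_kwh * grid_l_per_kwh
    return (source_water_l + site_water_l) / it_kwh


if __name__ == "__main__":
    it_kwh = 1_000_000        # hypothetical annual IT energy
    total_kwh = 1_400_000     # hypothetical, i.e. a PUE of 1.4
    site_water_l = 1_500_000  # hypothetical annual cooling-tower water use
    grid_l_per_kwh = 2.0      # hypothetical grid water intensity

    print(f"WUE site:   {wue_site(site_water_l, it_kwh):.2f} L/IT kWh")
    print(f"WUE source: {wue_source(site_water_l, total_kwh, it_kwh, grid_l_per_kwh):.2f} L/IT kWh")
```

With these placeholder inputs, WUE source (about 4.3 L/IT kWh) is nearly three times WUE site (1.5 L/IT kWh). Quoting only the site figure can therefore understate a facility's total water footprint.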

Generally speaking, evaporating more water during heat rejection will normally reduce the energy used by mechanical cooling systems. To put this into perspective, however, the type of heat rejection used is a design decision based on various factors. Some of those decisions are made from a business perspective, weighing the cost of energy against the availability and cost of water. From a technical perspective, climate has a significant impact on water usage.

Moreover, large-scale and hyperscale data centers often use evaporative cooling solutions, and a significant number of sites are located in water-stressed areas, as multiple publications and sources have noted.

According to a June 2021 Virginia Tech white paper, The environmental footprint of data centers in the United States, “one-fifth of data center servers direct water footprint comes from moderately to highly water-stressed watersheds, while nearly half of servers are fully or partially powered by power plants located within water-stressed regions.”

According to a March 2021 NASA Earth Observatory post: “Almost half of the United States is currently experiencing some level of drought, and it is expected to worsen in upcoming months.”

Climate Factors in Energy Efficiency and Water Usage

The effectiveness of evaporative cooling depends on climatic conditions. While this is well known, it is not taken into account in the WUE metric. It has been partially addressed in the ASHRAE 90.4 Energy Standard for Data Centers, which includes a table of mechanical energy adjustment factors based on the US climate zone where the data center is located. Nevertheless, ASHRAE 90.4 does not account for water usage in its energy calculations.

While site water usage is relatively easy to measure, the WUE source metric is more complex but provides a more holistic view of overall sustainability.

However, the water used for power production varies widely with the fuel source. Calculating WUE source therefore requires a clear understanding of the type of energy actually supplied to a data center, which can differ by location. More and more data centers are committed to using renewable energy. Even so, calculating WUE source requires knowing the water consumption of the source that actually powers the data center. This information can sometimes be obtained from the energy provider. Its validity becomes muddied, however, when the energy is acquired through a power purchase agreement (PPA), and especially through a renewable PPA (RPPA). In most cases, an RPPA is simply a paper transaction rather than an actual renewable energy supply. Many organizations and some data centers use PPAs and Renewable Energy Certificates (RECs).

For reference, the U.S. Energy Information Administration (EIA) tracks the water usage of power plants for electricity generation.

Water Treatment Chemicals Impact Sustainability

In addition to the water lost to evaporation, cooling towers must be properly maintained and cleaned. This is needed both for operational efficiency and for health and safety, to prevent Legionella growth. It requires water treatment chemicals that have a negative impact on environmental sustainability. The amount of chemicals used increases with the data center's water usage and energy consumption. Water treatment chemicals therefore have a double impact on sustainability: through the amount the facility uses, and through the chemical waste and water involved in manufacturing them.

Carbon Usage Effectiveness

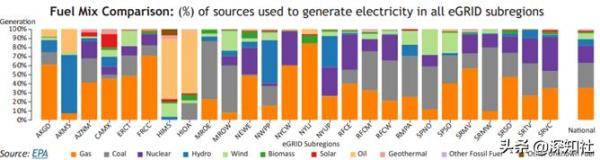

Carbon Usage Effectiveness (CUE) is another essential sustainability metric, directly tied to the source of energy generation.

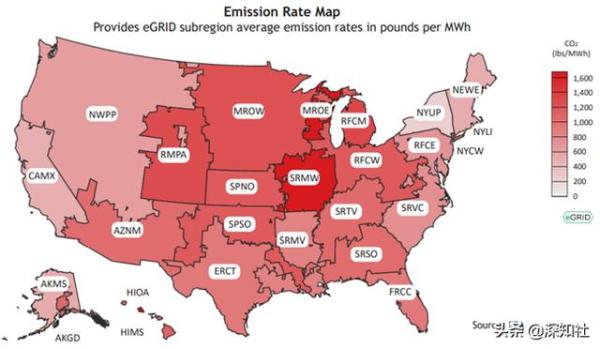

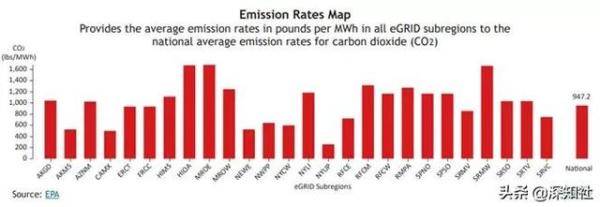

It is expressed as CUE Source. This is based on the annualized carbon emissions of the energy source (power generation), stated as kilograms of carbon dioxide equivalent (CO2e) per kWh of IT energy (kg/IT kWh). The EPA’s Emissions & Generation Resource Integrated Database (eGRID) website has a tool that lets users enter their zip code (or select a region) to view the power profile of their electricity supply.
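CUE source is driven by the carbon intensity of the electricity supply. It can be estimated from annual energy use and an emission factor, such as a regional value from eGRID. Here is a minimal Python sketch. It assumes a single emission factor applies to all energy the facility draws, and the factor and energy figures are hypothetical. Under that assumption, CUE equals the emission factor multiplied by PUE:

```python
def cue(total_facility_kwh: float, it_kwh: float, kg_co2e_per_kwh: float) -> float:
    """CUE: kg of CO2e emitted to supply the facility's energy, per kWh of IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh * kg_co2e_per_kwh / it_kwh


if __name__ == "__main__":
    it_kwh = 1_000_000     # hypothetical annual IT energy
    total_kwh = 1_400_000  # hypothetical, i.e. a PUE of 1.4
    for factor in (0.4, 0.0):  # hypothetical fossil-heavy grid vs. zero-emission supply
        print(f"emission factor {factor} kg/kWh -> CUE {cue(total_kwh, it_kwh, factor):.2f} kg/IT kWh")
```

Because CUE scales with both PUE and the emission factor, halving either one halves CUE. Efficiency and a cleaner supply are therefore complementary levers.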

Free Cooling

Free cooling typically refers to any type of cooling system that reduces or eliminates the need for mechanical (i.e., compressor-based) cooling. This can include direct or indirect air-side economizers, as well as water-side economizers. A water-side economizer is generally based on a heat exchanger that lets cooling tower water reduce the load on water-cooled chillers during cooler weather. All of these systems generally save a percentage of mechanical cooling energy during cooler weather. For both air-side and water-side economizers, free cooling is more effective in colder climates. These methods can be varied and combined in many ways to maximize energy effectiveness and optimize water usage over the seasons.

While water in itself is not energy, it takes energy to process and deliver clean water, a fact that is often overlooked. As water shortages increase, this is being addressed in the California Energy Code. The Codes and Standards Enhancement (CASE) Initiative 2022 report, “Title 24 2022 Nonresidential Computer Room Efficiency” (CA-Title 24), covers it as “Embedded Electricity in Water.”

Alternatively, waste heat can be rejected into bodies of water such as lakes, rivers, or even the ocean. In many cases, these bodies of water stay cool enough to cool data centers year-round without mechanical cooling, which is a significant energy saving. This approach can also reduce or eliminate the consumption of source water and cut the fossil fuels burned in non-renewable power generation.

Energy Reuse Effectiveness

For a given amount of total energy consumed, an air-cooled and a liquid-cooled data center generate virtually the same total waste heat. Clearly, a lower PUE reduces the total energy consumed for a given IT load. End-to-end thermal management typically refers to “chip-to-atmosphere” heat rejection, but the result is still just waste heat. One of the long-term sustainability goals is to recover and reuse that waste heat.

The Green Grid introduced the Energy Reuse Effectiveness (ERE) metric in 2011, along with the related Energy Reuse Factor (ERF), but both have had relatively little impact. This is primarily because the waste heat rejected by air-cooled ITE is more difficult to recover effectively.
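ERE subtracts reused energy from the facility total, while ERF expresses reuse as a fraction of total energy. Here is a minimal Python sketch. It assumes the commonly used definitions ERE = (total facility energy − reused energy) / IT energy and ERF = reused energy / total facility energy. The energy figures, including the district heating export, are hypothetical:

```python
def erf(reused_kwh: float, total_facility_kwh: float) -> float:
    """Energy Reuse Factor: share of total facility energy that is reused outside the data center."""
    return reused_kwh / total_facility_kwh


def ere(total_facility_kwh: float, reused_kwh: float, it_kwh: float) -> float:
    """Energy Reuse Effectiveness: (total facility energy - reused energy) / IT energy."""
    return (total_facility_kwh - reused_kwh) / it_kwh


if __name__ == "__main__":
    it_kwh = 1_000_000     # hypothetical annual IT energy
    total_kwh = 1_400_000  # hypothetical, i.e. a PUE of 1.4
    reused_kwh = 350_000   # hypothetical heat exported to a district heating loop
    print(f"PUE {total_kwh / it_kwh:.2f}, ERF {erf(reused_kwh, total_kwh):.2f}, "
          f"ERE {ere(total_kwh, reused_kwh, it_kwh):.2f}")
    # Equivalent form: ERE = (1 - ERF) * PUE
```

Here ERE (1.05) is well below PUE (1.40). Unlike PUE, ERE can fall below 1.0 if enough waste heat is reused.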

Liquid cooling provides a pathway to more effective energy reuse and recovery. Its higher fluid operating and return temperatures make it easier to recover a portion of the waste heat. Higher power densities can create cooling challenges, but they also offer multiple benefits and opportunities to improve overall energy efficiency, IT performance, and sustainability. The challenges are multi-dimensional. Over time, however, growing interest, technology improvements, and cost-effectiveness will continue to drive this initiative.

Higher Density Supports Space Reduction Benefits

Increasing power density from 5-10 kW per rack to 25-100 kW per rack offers the opportunity to substantially reduce the facility’s size. This in turn means the facility uses far less material and generates less construction waste. It also reduces the number of racks and the amount of power distribution equipment, further lowering material consumption.
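The space and equipment savings follow directly from the number of racks needed for a fixed IT load. Here is a minimal Python sketch. It assumes a hypothetical 1 MW IT load, racks filled to the stated density, and no allowance for redundancy or partially filled racks:

```python
import math


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Number of racks required to host an IT load at a given per-rack density."""
    if kw_per_rack <= 0:
        raise ValueError("rack density must be positive")
    return math.ceil(it_load_kw / kw_per_rack)


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical 1 MW IT load
    for density in (5, 10, 25, 100):  # kW per rack, the range given in the text
        print(f"{density:>3} kW/rack -> {racks_needed(it_load_kw, density):>3} racks")
```

In this example, moving from 5 kW to 100 kW per rack cuts the rack count from 200 to 10. Power distribution equipment and floor space shrink correspondingly.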

Higher Density Supports Higher IT Performance

Moreover, high-density computing also benefits the overall performance of computing systems. Shorter distances between IT and network equipment lower latency. This comes both from shorter intersystem connections within the rack and from shorter rack-to-rack and rack-to-core connections, which together improve overall multisystem throughput. This is especially true for High Performance Computing (HPC) and Artificial Intelligence (AI) workloads.

The Bottom Line

We are at a pivotal moment in the sustainability role that data centers play as part of the critical infrastructure and the digital economy. There is no question that mainstream data centers will not suddenly become functionally obsolete in the next few years. There is, however, a clear recognition that ignoring the elements of sustainability is no longer an option for new designs.

The Nautilus water-cooled data center (translator's note: Nautilus is a leading US manufacturer of zero-water-consumption data centers; see nautilusdt.com for details) embodies several thermal management technologies that can be applied, in whole or in part, on water or on land. It is more than just a proof of concept. It is a fully operational colocation facility that delivers high-density cooling effectively and efficiently at over 800 watts per square foot and 50-100 kW per cabinet, without consuming any water.

After the PUE metric appeared in 2007, it took several years before improving energy efficiency became one of the priorities for data center operations and new designs. It took almost ten years before it became a primary concern for many organizations with a social responsibility ethos. This decade has shown that the data center industry and its users have reached the next level of awareness. They now accept responsibility for long-term sustainable design and operation, approached holistically to make the most of the resources and performance advantages of a given geography and climate.

When deciding when and where to invest in building a new facility, data center operators make decisions based on business and customer market demands. In addition, before committing to build, designers and engineers have to balance several factors: the location's climate zone, cost, and the availability of sufficient energy and other resources such as water.

Major data center operators and hyperscalers, as well as the industry as a whole, have sustainability initiatives. As businesses, however, they may need to build in areas that are not ideal from a sustainability viewpoint but that offer a market opportunity and a near-term return on investment. At the same time, investors and financial professionals worldwide are increasingly factoring environmental, social, and governance (ESG) risks and opportunities into their investment decisions. This reflects a view that companies with sustainable practices may generate stronger returns in the long term.

Nautilus will begin using its technology to reuse a portion of an abandoned paper mill in a sustainable manner. In June 2021, Nautilus announced a land-based facility in Maine that takes advantage of the local topography by tapping a reservoir. The result is a gravity-fed cooling system that not only avoids the energy cost of mechanical cooling but also reduces pumping energy.

Moreover, the facility can also draw energy from an existing hydroelectric power plant operating on the site. Pairing this zero-emission supply with the data center’s energy-efficient high-density cooling substantially enhances the greenhouse gas (GHG) benefit of the zero-emission power procurement. In addition, future beneficial uses of its warmed discharge water will further improve the overall sustainability profile of the repurposed site.

The Nautilus data center in Stockton confirmed the technology's processes and performance. Conceivably, this first land-based project will become a reference design model. Moreover, it will hopefully encourage future high-density data centers that are strategically located to make efficient, sustainable use of natural resources with minimal impact on the surrounding ecosystems.

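DeepKnowledge

Translation:

Plato Deng

Senior Data Center Researcher, DeepKnowledge / DKV Founding Member

Account statement:

This article is for readers' study and reference only and may not be used for any commercial purpose. Do not republish without written authorization from the DeepKnowledge public account.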